YouTube algorithms exposing young men to the ‘manosphere’: Reset Australia

Lobby group Reset Australia and the Institute for Strategic Dialogue have published research suggesting that YouTube's algorithm is actively serving young men videos that express hateful and misogynistic attitudes toward women, content with the potential to lead young men into the 'manosphere'.

According to Reset Australia, the term 'manosphere' describes "a loose collection of movements", such as 'incels', Men Going Their Own Way (MGTOW) and men's rights activists (MRA), that are "marked by their overt and extreme misogyny". These groups exist primarily online and herald an explicit rejection of feminism, using terminology and tactics that often overlap with those of other far-right extremist groups.

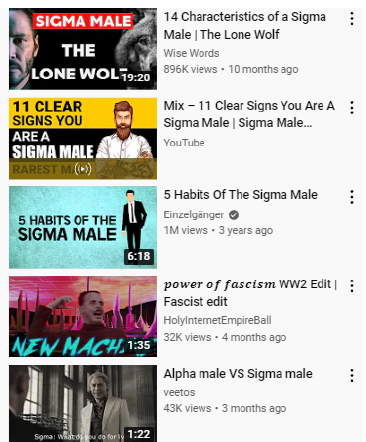

Screenshot from the Reset Australia report of sidebar recommendations for 'Sigma male' content served to the blank account.

Such an important finding, and it rings so true. As a 22-year-old male, I've found I can't visit YouTube without having 'Feminism destroyed in 4 minutes' or 'Jordan Peterson dismantles female reporter on equal rights' recommended as the top choice for my next video, even though I actively avoid any similar content. I was lucky to be old enough by the time this was being shoved in my face, but I've been concerned about its prevalence in my feed for some time, especially since I haven't clicked on videos that could lead me down this path. Very dangerous for younger audiences.